Part II - Compression of knowledge

This is the second post in the AI snapshot series about LLMs.

So we have arrived at large language models. They are a different kind of animal from anything in the history of information we explored in Part I. They are not a next-generation search engine, and they are not a new type of information. They are something different.

Why would we want to understand them? Because we are about to delegate (some) thinking to them.

Generative models

Let’s start with generative models, the kind most people know through tools like ChatGPT.

Think of these models as a knowledgeable friend who never starts a conversation but always has an answer when you ask something. This friend can be a bit weird—sometimes their answers are spot on, and other times they don't make sense. They don’t always care about being 100% accurate; they just aim to give you the most likely response based on your question.

For now, their answers are only as good as the questions you ask.

They’re trained to predict the next word in a sentence, over and over, until they get really good at it. For example, if you say "The quick brown fox jumps over the...", the model knows the most likely next words are "lazy dog" because it has seen this phrase many times during training.
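To make "predict the next word" concrete, here is a minimal sketch. It is not how ChatGPT works internally: it is a toy bigram counter that only looks at the last word, while real models use neural networks that look at the whole prompt. The corpus and the function names are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus):
    """Count, for every word, which words follow it in the training text."""
    follow_counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            follow_counts[current_word][next_word] += 1
    return follow_counts

def predict_next(model, word):
    """Return the most frequently seen follower of `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def complete(model, prompt, max_words=2):
    """Extend the prompt one word at a time, always picking the likeliest word."""
    words = prompt.lower().split()
    for _ in range(max_words):
        next_word = predict_next(model, words[-1])
        if next_word is None:
            break
        words.append(next_word)
    return " ".join(words)

# Tiny made-up training corpus (an assumption for this example).
corpus = [
    "the quick brown fox jumps over the lazy dog",
    "the quick brown fox jumps over the lazy dog",
    "a fox jumps over the lazy dog",
    "the lazy dog sleeps all day",
]
model = train_bigram_model(corpus)
print(complete(model, "The quick brown fox jumps over the"))
```

The toy model has no idea what a fox or a dog is. It completes the phrase only because "lazy" followed "the" more often than anything else in its training text, and "dog" always followed "lazy".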

The Financial Times has a fantastic visualisation that demonstrates how these models work: https://ig.ft.com/generative-ai/

The model doesn’t truly understand language like we do. Instead, it’s as if it has memorised patterns of words that often go together. When you give it a prompt, you give it a seed, and it generates a response that fits the piece of the pattern you just provided.

If you ask a simple question, you get a simple (average) answer. Ask something more detailed, and the average answer gets more detailed too, because you have added more context. Classic GIGO: garbage in, garbage out.

These models are good at reasoning over what they are given. The problem is having the right data to reason about. This brings us to embedding models, which help solve the problem of finding the right information to start with.

Embedding models

Embedding models are quite different from generative models. While generative models always "have an opinion" and generate a response, embedding models don't try to reason or provide direct answers. Instead, they excel at capturing how different pieces of information are related to each other.

In Part I, we used the analogy of a librarian to describe Google Search. Embedding models are like a different type of librarian, one who doesn’t search by keywords or use algorithms like PageRank. Instead, this librarian organizes all the information based on how similar or related the items are to one another—using what can be thought of as an invisible, multi-dimensional map.

For us, humans, it’s difficult to visualize information spread across thousands of dimensions because we typically think in three physical dimensions, with time as a fourth. However, embedding models can capture the relationships between pieces of information in many more dimensions. This allows them to understand subtleties in the data that are beyond our natural comprehension.

To understand how these models work, imagine a huge, chaotic library where books are initially scattered randomly. During the training process, the librarian learns where items should be placed by seeing countless examples. For instance, it might see a sentence like, "The quick brown fox jumps over the lazy dog," and learn to associate "fox" with words like "dog," "wolf," or "animal," and "dog" with words like "cat," "pet," or "animal." Each time the librarian makes a guess about where to place these items, it receives feedback and adjusts its internal map accordingly.

Over time, the librarian develops a nuanced understanding of how words and concepts relate across different dimensions. For example, it learns that "Paris" is closely related to "France," "Eiffel Tower," and "city," not because it understands why but because these terms frequently appear together. This allows the librarian to organize and retrieve information based on meaning, rather than just keywords.

Real-World Use of Embedding Models

So how does this work in the real world? Imagine you have a database with thousands of customer reviews—some good, some bad, and, of course, some ugly. In the past, you might have needed a machine learning engineer to manually develop tools to categorise these reviews, which involved using various natural language processing techniques and training specific models to capture the meaning of the text.

With embedding models, the process becomes much simpler, because the model has already encapsulated a certain world view. Now it can be as simple as typing in a word like "anger," and getting back the reviews that are close to the idea of "anger", even if the word "anger" is not explicitly mentioned. The model captures the underlying meaning and context because it has learned the relationships between words, concepts, and emotions.
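Here is a rough sketch of what "close to the idea of anger" means in practice. The three-dimensional vectors below are hand-made assumptions for illustration. A real embedding model would produce vectors with hundreds or thousands of dimensions. The search itself is just cosine similarity between the query vector and each review vector.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical hand-made embeddings. The dimensions loosely stand for
# (anger, joy, delivery); a real model would compute these for you.
REVIEW_EMBEDDINGS = {
    "I am furious, the package arrived broken again.": [0.9, 0.0, 0.4],
    "Absolutely love it, works like a charm!": [0.0, 0.9, 0.1],
    "This is outrageous, nobody answers my emails.": [0.8, 0.1, 0.0],
    "Delivery was on time, nothing special.": [0.1, 0.2, 0.9],
}
QUERY_EMBEDDINGS = {"anger": [1.0, 0.0, 0.0]}

def search(query, top_k=2):
    """Return the top_k reviews whose vectors point closest to the query vector."""
    query_vector = QUERY_EMBEDDINGS[query]
    scored = [
        (cosine_similarity(query_vector, vector), text)
        for text, vector in REVIEW_EMBEDDINGS.items()
    ]
    scored.sort(reverse=True)
    return [text for _, text in scored[:top_k]]

for review in search("anger"):
    print(review)
```

Neither of the two returned reviews contains the word "anger". They come back because their vectors point in roughly the same direction as the query.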

And it doesn’t stop there. Embedding models can also be multimodal, meaning they can understand both text and images in the same space. For example, you could embed a picture of an angry person and ask the model to find reviews that match the sentiment in the expression. This is possible because the model has learned to map both visual and textual information into a common space, allowing it to compare the underlying meanings directly, regardless of the format.

Here is a fun project that searches for faucets visually, based on keywords like "birds" (around the 24th minute).

And here is a really interesting project related to music:

https://galaxy.spotifytrack.net/

The Magic Behind the Simplicity

The underlying idea behind these models is simple: pattern recognition. That might make it seem like there should be more to it. And indeed, while the concepts are easy to grasp, the real complexity lies not in the algorithms themselves but in the data and the technical infrastructure that supports them. These models are trained on an immense amount of data drawn from a wide variety of sources, including books, websites, articles, and more. This vast volume of data provides the models with a rich and diverse set of patterns to learn from. As we discussed in the previous post about the history of information, we’ve reached a point where we produce digital information in enormous quantities, constantly digitizing knowledge about our world.

Another crucial component is the ability to scale this training process. The algorithms are designed to be trained in parallel across thousands of computers, allowing them to process and learn from vast amounts of data in a reasonable timeframe. This combination of extensive data and scalable computing power is what enables these models to achieve such impressive results.

However, it’s important to remember that despite this complexity, these models don’t possess inherent understanding or reasoning skills—they don't "know" in the human sense. They simply calculate probabilities based on the patterns they’ve learned.

It's a bit sad and confusing that LLMs ("Large Language Models") have little to do with language; It's just historical. They are highly general purpose technology for statistical modeling of token streams. A better name would be Autoregressive Transformers or something.

They don't care if the tokens happen to represent little text chunks. It could just as well be little image patches, audio chunks, action choices, molecules, or whatever. If you can reduce your problem to that of modeling token streams (for any arbitrary vocabulary of some set of discrete tokens), you can "throw an LLM at it".

"Through an extensive series of over 1,000 experiments, we provide compelling evidence that emergent abilities can primarily be ascribed to in-context learning. We find no evidence for the emergence of reasoning abilities, thus providing valuable insights into the underlying mechanisms driving the observed abilities and thus alleviating safety concerns regarding their use."

From the paper "Are Emergent Abilities in Large Language Models just In-Context Learning?"

The missing piece

ChatGPT was launched at the end of 2022—almost two years ago. Since then, investors have poured significant funding into "AI-first" applications, but where are the results? Where is the promised AI future?

We currently have two powerful AI tools. One is an intelligent librarian that can map and organise information but doesn't give direct answers. The other is a chatty friend that can talk about anything, but doesn't know everything. It feels like there’s a missing piece.

That missing piece is an agent. Right now, we humans act as the agents, bridging the gap between these two tools. You’ve probably had an experience like this: you open ChatGPT and think, "Wow, this thing is incredibly smart. I can ask aaaanything." But then you ask something too vague, like "How do I become rich?" or too specific, like "What is the value of my cousin’s company?" and realise it doesn't know without additional context. So you do some copy-and-paste magic and suddenly the response is much better. In this scenario, you're acting as the intermediary, providing context and direction to get useful results. This is the part that can be delegated to an agent.

For AI to truly work seamlessly, we need to connect the librarian’s ability to organise with the conversational skills of our chatty friend, and that’s exactly the role of agents. In 2024, agents have become all the hype, but developing them isn't as straightforward as it might seem.

The idea behind agents is simple: they need to fetch data, make decisions based on that data, and use it to accomplish a specific goal. But achieving this requires overcoming several roadblocks, which explains why our bright AI future isn't fully realised. Yet!
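The fetch, decide, act loop can be sketched in a few lines. Everything here is a stand-in chosen for illustration: the knowledge base is made up, the "librarian" is a crude keyword match where a real agent would use embedding search, and `echo_model` is a hypothetical placeholder for a call to a generative model.

```python
import re

# Words too common to be useful for matching (a small, hand-picked assumption).
STOPWORDS = {"a", "an", "the", "is", "of", "my", "what", "how", "do", "i", "at"}

def keywords(text):
    """Lowercase the text and keep only the meaningful words."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS

def retrieve_context(question, knowledge_base, top_k=2):
    """Stand-in for the librarian: rank facts by keyword overlap with the question."""
    wanted = keywords(question)
    scored = [(len(wanted & keywords(fact)), fact) for fact in knowledge_base]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(reverse=True)
    return [fact for _, fact in scored[:top_k]]

def run_agent(question, knowledge_base, generate):
    context = retrieve_context(question, knowledge_base)  # 1. fetch data
    if not context:                                       # 2. decide
        return "Not enough context to answer; please provide more details."
    prompt = "Context:\n" + "\n".join(context) + "\n\nQuestion: " + question
    return generate(prompt)                               # 3. act

def echo_model(prompt):
    """Hypothetical placeholder for the chatty friend; it only echoes the prompt."""
    return "MODEL SAW:\n" + prompt

# Made-up facts for the example.
KNOWLEDGE_BASE = [
    "The cousin's company is called Acme Ltd.",
    "The value of Acme Ltd is estimated at 2 million euros.",
    "The office plant needs watering on Fridays.",
]

print(run_agent("What is the value of my cousin's company?", KNOWLEDGE_BASE, echo_model))
print(run_agent("How do I become rich?", KNOWLEDGE_BASE, echo_model))
```

The first question pulls in the two relevant facts and leaves out the office plant, which is the copy-and-paste step a human does by hand today. The second question finds nothing to work with, so the agent says so instead of guessing.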

The first challenge is that people don't know what they don't know. We aren’t accustomed to thinking in terms of vectors, to transforming information for effective embedding-based queries, or to retrieving that information through prompting. We’re still learning how to integrate, store, and use this kind of knowledge effectively.

Another challenge is infrastructure. In our earlier example of customer reviews, nowadays that data is stored in a relational database, not a vector database. And that’s fine—relational databases are still useful and not going away. Vector representations simply provide a different view of the same data, enabling more context-aware retrieval. But the adoption of vector databases and understanding how to leverage them is still nascent.

Delegating tasks to AI is also far more complex than it seems. It’s one thing to work through a prompt iteratively until you get a satisfactory result, but entirely another to trust AI to carry out repetitive tasks reliably. You have probably had a frustrating experience where the model just wouldn’t quite do what you intended. Now imagine relying on such a system to perform the same task at scale. Scaling AI work requires a different approach, one that includes the ability to conduct systematic testing, refine task definitions, and ensure reliability.
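Systematic testing can start very small: a fixed set of examples with expected answers, run against the task every time the prompt or the model changes. The sketch below assumes a hypothetical `classify_review` function. Here it is a crude keyword rule standing in for a real model call, and the test cases are made up.

```python
def classify_review(text):
    """Hypothetical stand-in for a model call; returns 'negative' or 'positive'."""
    negative_markers = ("broken", "furious", "refund", "never again")
    lowered = text.lower()
    return "negative" if any(marker in lowered for marker in negative_markers) else "positive"

# Made-up labelled examples; in practice these come from your own data.
TEST_CASES = [
    ("The package arrived broken.", "negative"),
    ("I want a refund immediately.", "negative"),
    ("Works like a charm, thank you!", "positive"),
    ("Not great, would not recommend.", "negative"),  # the stand-in gets this one wrong
]

def evaluate(task, cases):
    """Run the task once per case and collect the pass rate and the failures."""
    failures = []
    for text, expected in cases:
        actual = task(text)
        if actual != expected:
            failures.append((text, expected, actual))
    pass_rate = 1 - len(failures) / len(cases)
    return pass_rate, failures

pass_rate, failures = evaluate(classify_review, TEST_CASES)
print(f"pass rate: {pass_rate:.0%}")
for text, expected, actual in failures:
    print(f"FAILED: {text!r} expected {expected}, got {actual}")
```

The failure list is the useful part. It tells you which task definitions to refine before you trust the system to run the same job thousands of times.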

For AI to achieve its full potential, agents must seamlessly bridge these gaps. They need the infrastructure to support diverse data formats, the knowledge to efficiently use vector search, and the consistency needed for real-world, repetitive tasks. This is the challenge we must solve if we want AI to genuinely augment our capabilities rather than remain an impressive but limited toolset.

I like how Alex from Anthropic talks about this at the beginning of this presentation, comparing it to how the transition from steam engines to electricity happened. The true power comes when you build a new factory from scratch around the new technology.

Metrics

Now that we have looked at these models and how they work, we can revisit our metrics and see how this new information technology compares.

Latency. These models are close to real time. For now. Most likely they will slow down when we start to delegate more complex tasks to agents. They will still be fast compared to human speed, but deep research on a topic won't come back in a couple of seconds.

Novelty. This also changes. These models either contain existing information or they react to your input. If you ask a dumb thing based on unreliable information, you will get some shit back. Garbage in, garbage out. Then again, you can ask weird questions like “What human emotion has no name”, “How could I apply machine learning to raising teens in a foreign country” or explore some scientific topic from a different angle. It will have an opinion about everything. Sometimes it is novel. Sometimes it is garbage. You decide.

Authority. The "truth" is captured in the model. It might think like the Golden Gate Bridge. It might hate white men or black women, or lean right-wing or leftist. You really don't know, particularly with generative models. You don't know why they make a given decision.

Precision. This we have lost. There is no single piece of truth or source. We cannot trust any piece of information because we don't know how much AI is in it. A lot of people consume information on social media, and they are literally driven by algorithms.

The good parts

While some of this may sound like doom and gloom, there are major positives.

First, this technology is accessible to anyone. You no longer need vast datasets or expensive compute power to benefit from AI. Platforms like Hugging Face offer small, pre-trained models (they recently crossed the 1m mark) that you can fine-tune for your specific needs, whether that’s sorting customer applications, summarising reports, or generating mockups. All you need is a solid understanding of your problem and a few good examples to guide the model.

This accessibility is transformative. In the past, only big companies with deep pockets could afford to leverage this kind of technology. Now, anyone can. This shift democratises AI, giving individuals and smaller organisations the power to create solutions that used to be exclusive to tech giants.

With more people having access, the power dynamic is changing. For years, big platforms have controlled what we see through algorithms optimised for eyeballs and dopamine. Now, that control is loosening, and the potential for users to shape their own experiences is growing.

We’ll see.

What do you want?

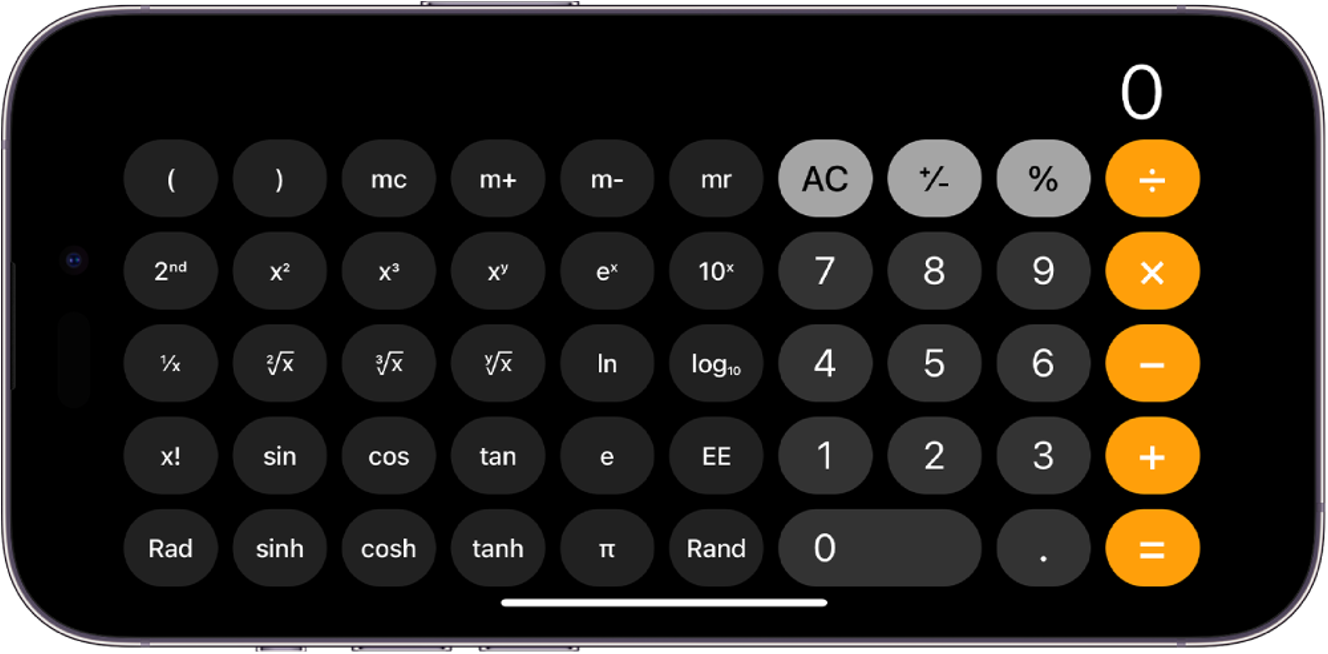

In the past you had to be a trained mathematician to perform advanced calculations. Then came the calculator. Now, anyone with a cell phone can perform complicated calculations right from their pocket.

But having a calculator doesn’t mean you can build a rocket ship. A calculator is essential to the process, but it’s not enough on its own. The same can be said of information and the internet. The internet promised to bring us all the knowledge in the world and eliminate illiteracy, empowering anyone to become whoever they want to be. Yet, instead of building on this potential, we end up sharing cat memes and hanging out in flat-earther communities.

Large language models are just tools, like a calculator or the internet. It is how we use this “hammer” that matters. Models have no agenda of their own. Just as a calculator can perform any calculation you ask of it, these models can answer any question you pose. But they cannot determine what to ask. That’s up to you, Homo sapiens.

What exactly do you want to accomplish? This is one of the fundamental questions we must answer if we are to use these tools effectively. If we intend to delegate parts of that agenda to a machine, we need to be crystal clear about our goals and capable of communicating them precisely.

This is the topic of Part III on the future of work: Master of Puppets.

And a short reading list: